Client: Ironsmith, a tree grate manufacturer located in Palm Desert, CA.

Project Background

Moving from an old application to a new one comes with an additional concern, what to do with all the existing data. How do you migrate the data to the new system when there will be fundamental changes to the data schema?

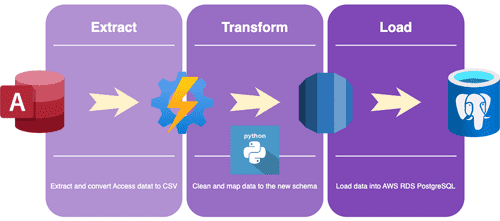

Over 15 years their old application had accumulated hundreds of thousands of rows of critical product, project, and sales data. Some tables had over 150k rows. Our plan was to create a script that would extract the old data from Microsoft Access, clean and transform it to fit the new schema, then load it into AWS RDBMS PostgreSQL. We automated as much as we could so that the day of the switchover to the new system business could resume as usual.

What We Did

We built a Python script to extract, clean, and transfer the data.

Extract:We used Python and used the pyodbc library that could connect to Access. With only one output format choice we extracted the Access data into CSVs.

Transform: We had the new database set up and knew ahead of time the data types to map to. We looked at the CSV data to get a feel for the data, to spot patterns and discrepancies. This led us to find many issues with their old data: values used instead of foreign key ids, and these relational values had typos, deleted records that had dependent rows of data.

We went through each table of the new database schema and wrote code to extract the data from the relevant CSVs. Some of the original table relationships were not by id but by value so we had to build the foreign key relationships between tables so that in the load step there wouldn't be foreign key constraint errors. To test if our rows of data conformed to the new schema we would upload to a local PostgreSQL database and used the errors to track down incorrect data. We did this until PostgreSQL did not throw an error.

Load: The final step of the script was to load the data to the remote database on AWS.

The result was a data migration script that could transfer the data to AWS with a one line command when they move to the new application.

Future

- One issue with CSV is it does not preserve data types. A potential fix for the next project would be to go from CSV to Parquet so we know exactly what data types we are dealing with.

- We think with a library like SQLAlchemy we could explicitly define the data types for data going into PostgreSQL and catch errors that way instead of relying on catching errors loading into a local PostgreSQL database.

If you are facing a data migration away from Microsoft Access contact us today.